Hasan Ahmad; September 16th, 2020

This week in the Present column: a special write-up on the emerging field of “neuroethics”.

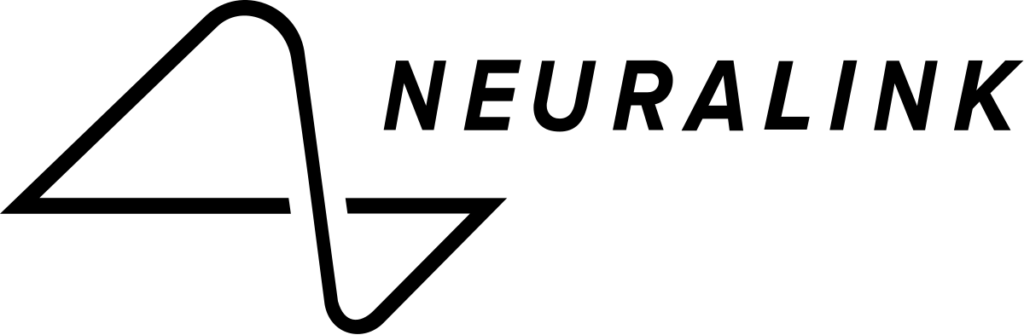

Neuralink’s new brain-machine interface, a project funded and organized by Elon Musk, has stirred up a great deal of controversy and is representative of the issues surrounding the area of “neuroethics”. Neuralink has developed this device for many reasons, including helping people deal with neurological conditions, aiding those who have lost the ability to control their limbs, and potentially creating a pathway to more advanced artificial intelligence systems. As engineers continue to push the boundaries of medical technology, the line between human and machine blurs, and crossing it into dangerous territory may become inevitable.

The primary ethical problems arise from the potential misuse of these devices. Instead of pursuing the noble goal of helping someone regain limb control, what if an organization, such as the military, proposed to use the device for interrogation? If the technology becomes advanced enough, would it be morally acceptable for the military to use these devices to extract information from its enemies? What about private groups? Could companies access their employees’ minds if this technology becomes as widespread as Musk desires? Using this technology for truth detection, memory analysis, and other relatively simple neurological operations is not far off. In a few years, these questions will be staring us in the face.


Numerous innovations throughout history, though created for helpful purposes, have been weaponized in one way or another. Take the internet, for example. Originally created to enhance communication, it is now also used to manipulate people through social media warfare, to target fundamental infrastructure like banks and the power grid, and even potentially to hack elections. Everything from computers to cars to nuclear energy can be, and has been, turned into a weapon. Neurotechnology will almost certainly join this trend.

Stopping innovation in neurotech isn’t feasible. Some people, such as those who have lost the use of parts of their body, need it. That’s why it’s crucial to discuss the ethics of this technology early on. People need to learn the dangers and risks of this technology, and society needs to strictly define who may need it. Otherwise, the science-fiction questions raised above may come back to haunt us.

Post inspired by this University of Washington article: https://www.washington.edu/boundless/neuroethics/